Originally published on the Just Eat Takeaway Engineering Blog.

How Just Eat Takeaway.com leverage AWS, Packer, Terraform and GitHub Actions to manage a CI stack of macOS runners.

Problem

At Just Eat Takeaway.com (JET), our journey through continuous integration (CI) reflects a landscape of innovation and adaptation. Historically, JET’s multiple iOS teams operated independently, each employing their distinct CI solutions.

The original Just Eat iOS and Android teams had pioneered an in-house CI solution anchored in Jenkins. This setup, detailed in our 2021 article, served as the backbone of our CI practices up until 2020. It was during this period that the iOS team initiated a pivotal migration: moving from in-house Mac Pros and Mac Minis to AWS EC2 macOS instances.

Fast forward to 2023, a significant transition occurred within our Continuous Delivery Engineering (CDE) Platform Engineering team. The decision to adopt GitHub Actions company-wide has marked the end of our reliance on Jenkins while other teams are in the process of migrating away from solutions such as CircleCI and GitLab CI. This transition represented a fundamental shift in our CI philosophy. By moving away from Jenkins, we eliminated the need to maintain an instance for the Jenkins server and the complexities of managing how agents connected to it. Our focus then shifted to transforming our Jenkins pipelines into GitHub Actions workflows.

This transformation extended beyond mere tool adoption. Our primary goal was to ensure that our macOS instances were not only scalable but also configured in code. We therefore enhanced our global CI practices and set standards across the entire company.

Desired state of CI

As we embarked on our journey to refine and elevate our CI process, we envisioned a state-of-the-art CI system. Our goals were ambitious yet clear, focusing on scalability, automation, and efficiency. At the time of implementing the system, no other player in the industry seemed to have implemented the complete solution we envisioned.

Below is a summary of our desired CI state:

- Instance setup in code: One primary objective was to enable the definition of the setup of the instances entirely in code. This includes specifying macOS version, Xcode version, Ruby version, and other crucial configurations. For this purpose, the HashiCorp tool Packer emerged once again as an ideal solution, offering the flexibility and precision we required.

- IaC (Infrastructure as Code) for macOS instances: To define the infrastructure of our fleet of macOS instances, we leaned towards Terraform, another HashiCorp tool. Terraform provided us with the capability to not only deploy but also to scale and migrate our infrastructure seamlessly, crucially maintaining its state.

- Auto and Manual Scaling: We wanted the ability to dynamically create CI runners based on demand, ensuring that resources were optimally utilized and available when needed. To optimize resource utilization, especially during off-peak hours, we desired an autoscaling feature. Scaling down our CI runners on weekends, when developer activity is minimal, was critical to keeping costs under control.

- Automated Connection to GitHub Actions: We aimed for the instances to automatically connect to GitHub Actions as runners upon deployment. This automation was crucial in eliminating manual interventions via SSH or VNC.

- Multi-Team Use: Our vision included CI runners that could be easily used by multiple teams across different time zones. This would not only maximize the utility of our infrastructure but also encourage reuse and standardization.

- Centralized Management via GitHub Actions: To further streamline our CI processes, we intended to run all tasks through GitHub Actions workflows. This approach would allow the teams to self-serve and alleviate the need for developers to use Docker or maintain local environments.

Getting to the desired state was a journey that presented multiple challenges and constant adjustments to make sure we could migrate smoothly to a new system.

Instance setup in code

We implemented the desired configuration with Packer leveraging a number of Shell Provisioners and variables to configure the instance. Here are some of the configuration steps:

- Set user password (to allow remote desktop access)

- Resize the partition to use all the space available on the EBS volume

- Start the Apple Remote Desktop agent and enable remote desktop access

- Update Brew & Install Brew packages

- Install CloudWatch agent

- Install rbenv/Ruby/bundler

- Install Xcode versions

- Install GitHub Actions actions-runner

- Copy scripts to connect to GitHub Actions as a runner

- Copy daemon to start the GitHub Actions self-hosted runner as a service

- Set macos-init modules to perform provisioning on the first launch

While the steps above are naturally configuration steps to perform when creating the AMI, the macos-init modules include steps to perform once the instance becomes available.

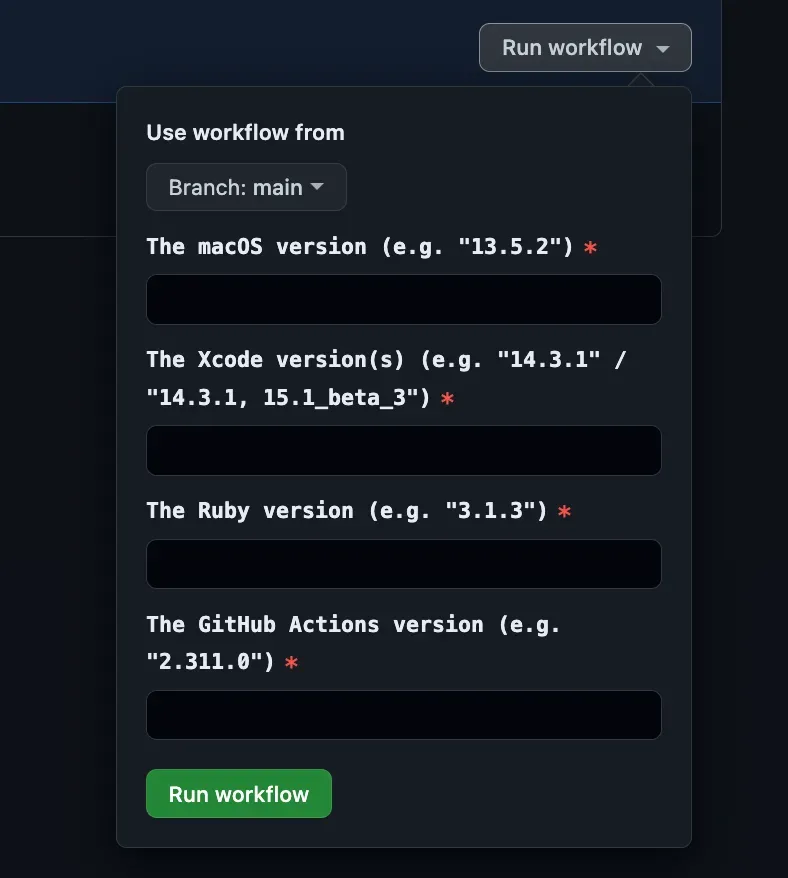

The create_ami workflow accepts inputs that are eventually passed to Packer to generate the AMI.

```shell
packer build \
  --var ami_name_prefix=${{ env.AMI_NAME_PREFIX }} \
  --var region=${{ env.REGION }} \
  --var subnet_id=${{ env.SUBNET_ID }} \
  --var vpc_id=${{ env.VPC_ID }} \
  --var root_volume_size_gb=${{ env.ROOT_VOLUME_SIZE_GB }} \
  --var macos_version=${{ inputs.macos-version}} \
  --var ruby_version=${{ inputs.ruby-version }} \
  --var xcode_versions="${{ steps.parse-xcode-versions.outputs.list }}" \
  --var gha_version=${{ inputs.gha-version}} \
  bare-metal-runner.pkr.hcl
```

Different teams often use different versions of software, like Xcode. To accommodate this, we permit multiple versions to be installed on the same instance. The choice of which version to use is then determined within the GitHub Actions workflows.
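The parse-xcode-versions step referenced in the command is not shown in this article. As a minimal sketch only, assuming the workflow input is a free-form comma-separated string and that the Packer template accepts a clean comma-separated value, such a step could normalise the input along these lines. The function name and the accepted version format are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper (not taken from the JET repository): normalise a
# comma-separated list of Xcode versions, e.g. "15.0, 15.1" -> "15.0,15.1".
normalize_xcode_versions() {
  local input="$1" item out=""
  local re='^[0-9]+(\.[0-9]+)*$'   # assumption: versions look like 15.1 or 14.3.1
  local -a parts
  # Split the input on commas; empty fields are kept and skipped below.
  IFS=',' read -r -a parts <<< "$input"
  for item in "${parts[@]}"; do
    item="${item//[[:space:]]/}"   # drop any whitespace around the version
    [ -z "$item" ] && continue
    if [[ ! "$item" =~ $re ]]; then
      echo "invalid Xcode version: $item" >&2
      return 1
    fi
    out+="${out:+,}$item"          # append, comma-separated
  done
  printf '%s\n' "$out"
}
```

Rejecting malformed entries before calling Packer is a cheap safeguard, given that building a macOS AMI takes well over an hour.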

The seamless generation of AMIs has proven to be a significant enabler. For example, when Xcode 15.1 was released, we executed this workflow the same evening. In just over two hours, we had an AMI ready to deploy all the runners (it usually takes 70–100 minutes for a macOS AMI with 400GB of EBS volume to become ready after creation). This efficiency enabled our teams to use the new Xcode version just a few hours after its release.

IaC (Infrastructure as Code) for macOS instances

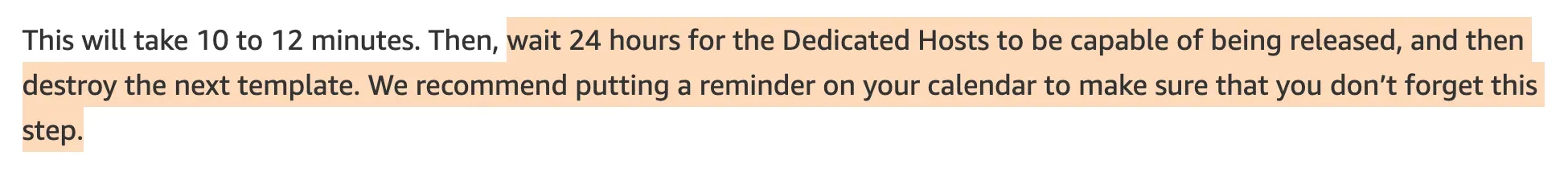

Initially, we used distinct Terraform modules for each instance to facilitate the deployment and decommissioning of each one. Given the high cost of EC2 Mac instances, we managed this process with caution, carefully balancing host usage while also being mindful of the 24-hour minimum allocation time.

We ultimately ended up using Terraform to define a single infrastructure (i.e. a single Terraform module) defining resources such as:

- aws_key_pair, aws_instance, aws_ami

- aws_security_group, aws_security_group_rule

- aws_secretsmanager_secret

- aws_vpc, aws_subnet

- aws_cloudwatch_metric_alarm

- aws_sns_topic, aws_sns_topic_subscription

- aws_iam_role, aws_iam_policy, aws_iam_role_policy_attachment, aws_iam_instance_profile

A crucial part was to use count in aws_instance, setting it to the value of a variable passed in from the deploy_infra workflow. Terraform performs the necessary scaling when the value changes.

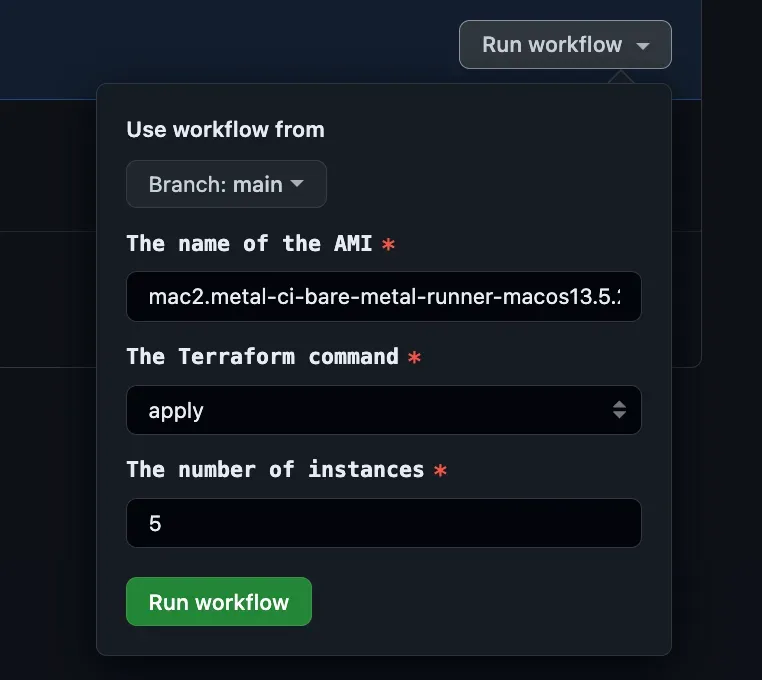

We have implemented a workflow to perform Terraform apply and destroy commands for the infrastructure. Only the AMI and the number of instances are required as inputs.

```shell
terraform ${{ inputs.command }} \
  --var ami_name=${{ inputs.ami-name }} \
  --var fleet_size=${{ inputs.fleet-size }} \
  --auto-approve
```

Using the name of the AMI instead of the ID allows us to use the most recent one that was generated, which is useful in case of name clashes.

```hcl
variable "ami_name" {
  type = string
}

variable "fleet_size" {
  type = number
}

data "aws_ami" "bare_metal_gha_runner" {
  most_recent = true

  filter {
    name   = "name"
    values = ["${var.ami_name}"]
  }
  ...
}

resource "aws_instance" "bare_metal" {
  count         = var.fleet_size
  ami           = data.aws_ami.bare_metal_gha_runner.id
  instance_type = "mac2.metal"
  tenancy       = "host"
  key_name      = aws_key_pair.bare_metal.key_name
  ...
}
```

Instead of maintaining multiple CI instances with varying software configurations, we concluded that it’s simpler and more efficient to have a single, standardised setup. While teams still have the option to create and deploy their unique setups, a smaller, unified system allows for easier support by a single global configuration.

Auto and Manual Scaling

The deploy_infra workflow allows us to scale on demand but it doesn’t release the underlying dedicated hosts which are the resources that are ultimately billed.

The autoscaling solution provided by AWS is great for VMs but gets noticeably more complex when applied to dedicated hosts. Auto Scaling groups on macOS instances would require a Custom Managed License, a Host Resource Group and, of course, a Launch Template. Using only AWS services appears to require a lot of work to pull things together, and the result wouldn’t allow for automatic release of the dedicated hosts.

Airbnb mention in their Flexible Continuous Integration for iOS article that an internal scaling service was implemented:

An internal scaling service manages the desired capacity of each environment’s Auto Scaling group.

Some articles explain how to set up Auto Scaling groups for mac instances (see 1 and 2) but after careful consideration, we agreed that implementing a simple scaling service via GitHub Actions (GHA) was the easiest and most maintainable solution.

We implemented 2 GHA workflows to fully automate the weekend autoscaling:

- Upscaling workflow to n, triggered at a specific time at the beginning of the working week

- Downscaling workflow to 1, triggered at a specific time at the beginning of the weekend

We retain the capability for manual scaling, which is essential for situations where we need to scale down, such as on bank holidays, or scale up, like on release cut days, when activity typically exceeds the usual levels.

Additionally, we have implemented a workflow that runs multiple times a day and tries to release all available hosts without an instance attached. This relieves us of the burden of having to remember to release the hosts. Dedicated hosts take up to 110 minutes to move from the Pending to the Available state due to the scrubbing workflow performed by AWS.

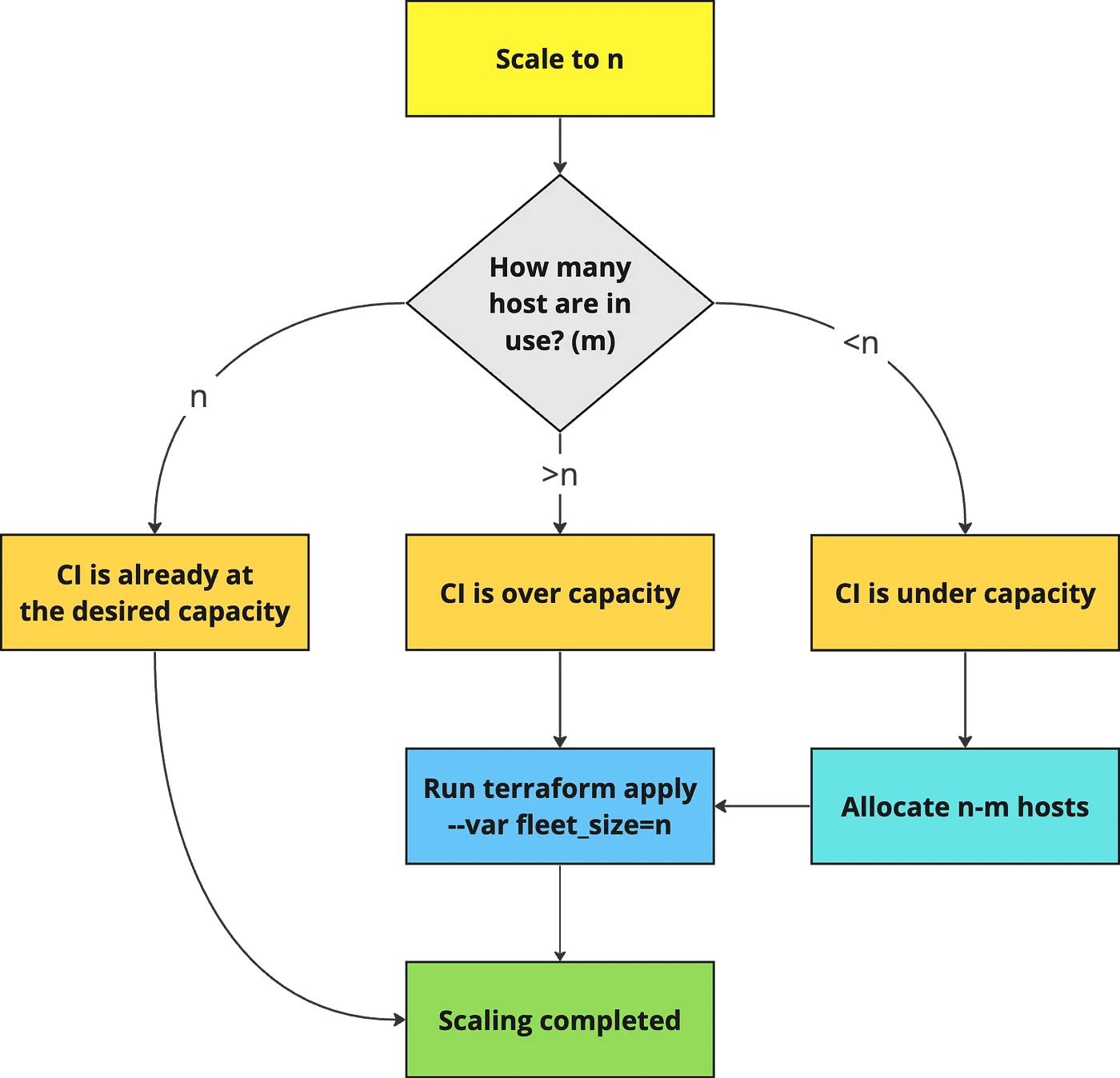
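The host-release job can be sketched in a few lines of shell. This is an illustration rather than the workflow used at JET: it uses the AWS CLI's --query option where the article mentions jq, it assumes that describe-hosts returns an empty Instances list for unoccupied hosts, and the function names are hypothetical.

```shell
#!/bin/bash
# Sketch: release every Dedicated Host that is Available and hosts no instance.

# Print the IDs of Available hosts whose Instances list is empty.
list_unused_hosts() {
  aws ec2 describe-hosts \
    --filter "Name=state,Values=available" \
    --query 'Hosts[?length(Instances) == `0`].HostId' \
    --output text
}

release_unused_hosts() {
  local host_id result released=0
  for host_id in $(list_unused_hosts); do
    # release-hosts reports per-host results in Successful/Unsuccessful lists,
    # so inspect the response instead of relying on the exit status alone.
    result="$(aws ec2 release-hosts --host-ids "$host_id" \
      --query 'Successful[0]' --output text)"
    if [ "$result" = "$host_id" ]; then
      released=$((released + 1))
    else
      # For example, the host may still be inside its 24-hour minimum allocation.
      echo "could not release $host_id, leaving it for the next run" >&2
    fi
  done
  echo "released $released host(s)"
}
```

Because a failed release is simply logged and skipped, running the job several times a day gives each host repeated chances to be released once it becomes eligible.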

Manual scaling can be executed between the times the autoscaling workflows are triggered, so those workflows must be resilient to unexpected states of the infrastructure, which thankfully Terraform takes care of.

Both down and upscaling are covered in the following flowchart:

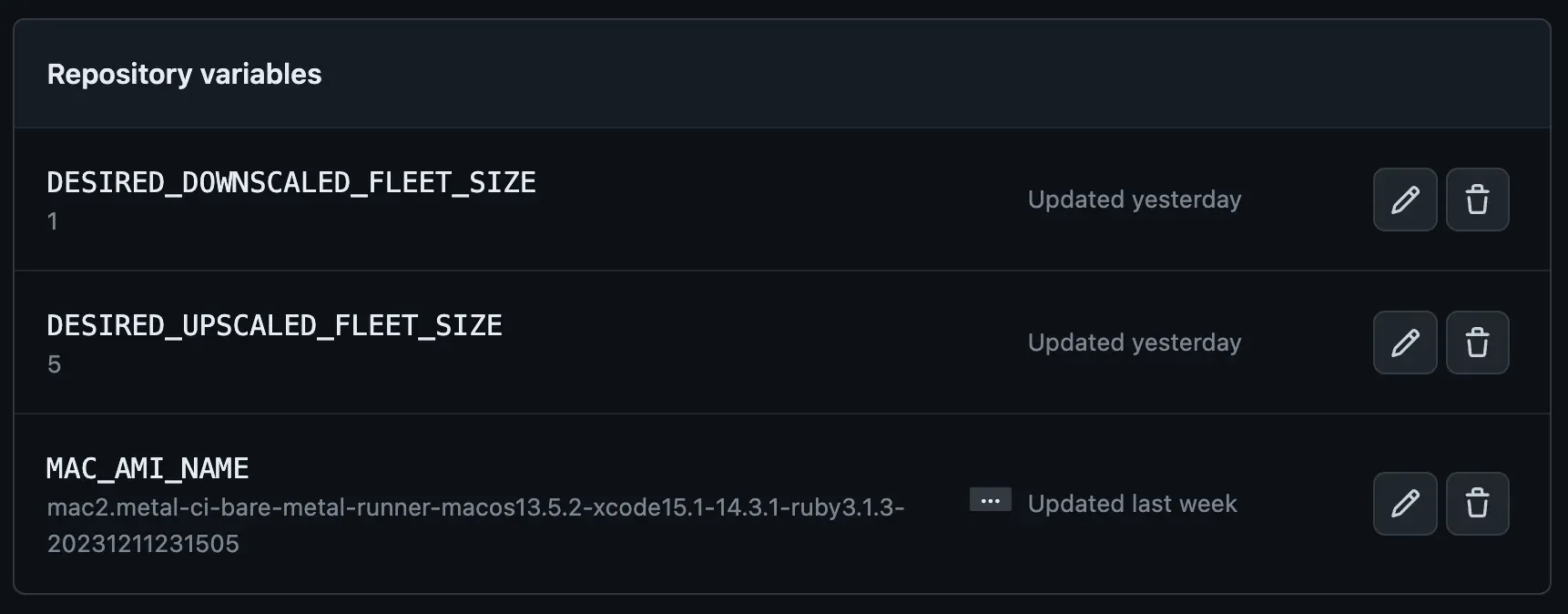

The autoscaling values are defined as configuration variables in the repo settings.

It usually takes ~8 minutes for an EC2 mac2.metal instance to become reachable after creation, meaning that we can redeploy the entire infrastructure very quickly.

Automated Connection to GitHub Actions

We provide some user data when deploying the instances.

```hcl
resource "aws_instance" "bare_metal" {
  ami   = data.aws_ami.bare_metal_gha_runner.id
  count = var.fleet_size
  ...
  user_data = <<EOF
{
  "github_enterprise": "<GHE_ENTERPRISE_NAME>",
  "github_pat_secret_manager_arn": "${data.aws_secretsmanager_secret_version.ghe_pat.arn}",
  "github_url": "<GHE_ENTERPRISE_URL>",
  "runner_group": "CI-MobileTeams",
  "runner_name": "bare-metal-runner-${count.index + 1}"
}
EOF
}
```

macos-init stores the user data in a specific folder, and we implement a module to copy its content to ~/actions-runner-config.json.

```toml
### Group 10 ###
[[Module]]
  Name = "Create actions-runner-config.json from userdata"
  PriorityGroup = 10
  RunPerInstance = true
  FatalOnError = false
  [Module.Command]
    Cmd = ["/bin/zsh", "-c", 'instanceId="$(curl http://169.254.169.254/latest/meta-data/instance-id)"; if [[ ! -z $instanceId ]]; then cp /usr/local/aws/ec2-macos-init/instances/$instanceId/userdata ~/actions-runner-config.json; fi']
    RunAsUser = "ec2-user"
```

This file is in turn used by the configure_runner.sh script to configure the GitHub Actions runner.

```shell
#!/bin/bash

GITHUB_ENTERPRISE=$(cat $HOME/actions-runner-config.json | jq -r .github_enterprise)
GITHUB_PAT_SECRET_MANAGER_ARN=$(cat $HOME/actions-runner-config.json | jq -r .github_pat_secret_manager_arn)
GITHUB_PAT=$(aws secretsmanager get-secret-value --secret-id $GITHUB_PAT_SECRET_MANAGER_ARN | jq -r .SecretString)
GITHUB_URL=$(cat $HOME/actions-runner-config.json | jq -r .github_url)
RUNNER_GROUP=$(cat $HOME/actions-runner-config.json | jq -r .runner_group)
RUNNER_NAME=$(cat $HOME/actions-runner-config.json | jq -r .runner_name)

RUNNER_JOIN_TOKEN=`curl -L \
  -X POST \
  -H "Accept: application/vnd.github+json" \
  -H "Authorization: Bearer $GITHUB_PAT" \
  $GITHUB_URL/api/v3/enterprises/$GITHUB_ENTERPRISE/actions/runners/registration-token | jq -r '.token'`

MACOS_VERSION=`sw_vers -productVersion`

XCODE_VERSIONS=`find /Applications -type d -name "Xcode-*" -maxdepth 1 \
  -exec basename {} \; \
  | tr '\n' ',' \
  | sed 's/,$/\n/' \
  | sed 's/.app//g'`

$HOME/actions-runner/config.sh \
  --unattended \
  --url $GITHUB_URL/enterprises/$GITHUB_ENTERPRISE \
  --token $RUNNER_JOIN_TOKEN \
  --runnergroup $RUNNER_GROUP \
  --labels ec2,bare-metal,$RUNNER_NAME,macOS-$MACOS_VERSION,$XCODE_VERSIONS \
  --name $RUNNER_NAME \
  --replace
```

The above script is run by a macos-init module.

```toml
### Group 11 ###
[[Module]]
  Name = "Configure the GHA runner"
  PriorityGroup = 11
  RunPerInstance = true
  FatalOnError = false
  [Module.Command]
    Cmd = ["/bin/zsh", "-c", "/Users/ec2-user/configure_runner.sh"]
    RunAsUser = "ec2-user"
```

The GitHub documentation states that it’s possible to create a customized service starting from a provided template. It took some research and various attempts to find the right configuration that allows the connection without having to log in to the UI (over VNC), which would be a blocker for a fully automated deployment. We believe that the only person who managed to get this right is Sébastien Stormacq, who provided the correct solution.

The connection to GHA can be completed with 2 more modules that install the runner as a service and load the custom daemon.

```toml
### Group 12 ###
[[Module]]
  Name = "Run the self-hosted runner application as a service"
  PriorityGroup = 12
  RunPerInstance = true
  FatalOnError = false
  [Module.Command]
    Cmd = ["/bin/zsh", "-c", "cd /Users/ec2-user/actions-runner && ./svc.sh install"]
    RunAsUser = "ec2-user"

### Group 13 ###
[[Module]]
  Name = "Launch actions runner daemon"
  PriorityGroup = 13
  RunPerInstance = true
  FatalOnError = false
  [Module.Command]
    Cmd = ["sudo", "/bin/launchctl", "load", "/Library/LaunchDaemons/com.justeattakeaway.actions-runner-service.plist"]
    RunAsUser = "ec2-user"
```

Using a daemon instead of an agent (see Creating Launch Daemons and Agents) doesn’t require us to set up any auto-login, which on macOS is a bit of a tricky procedure and is best avoided for security reasons too. The following is the content of the daemon for completeness.

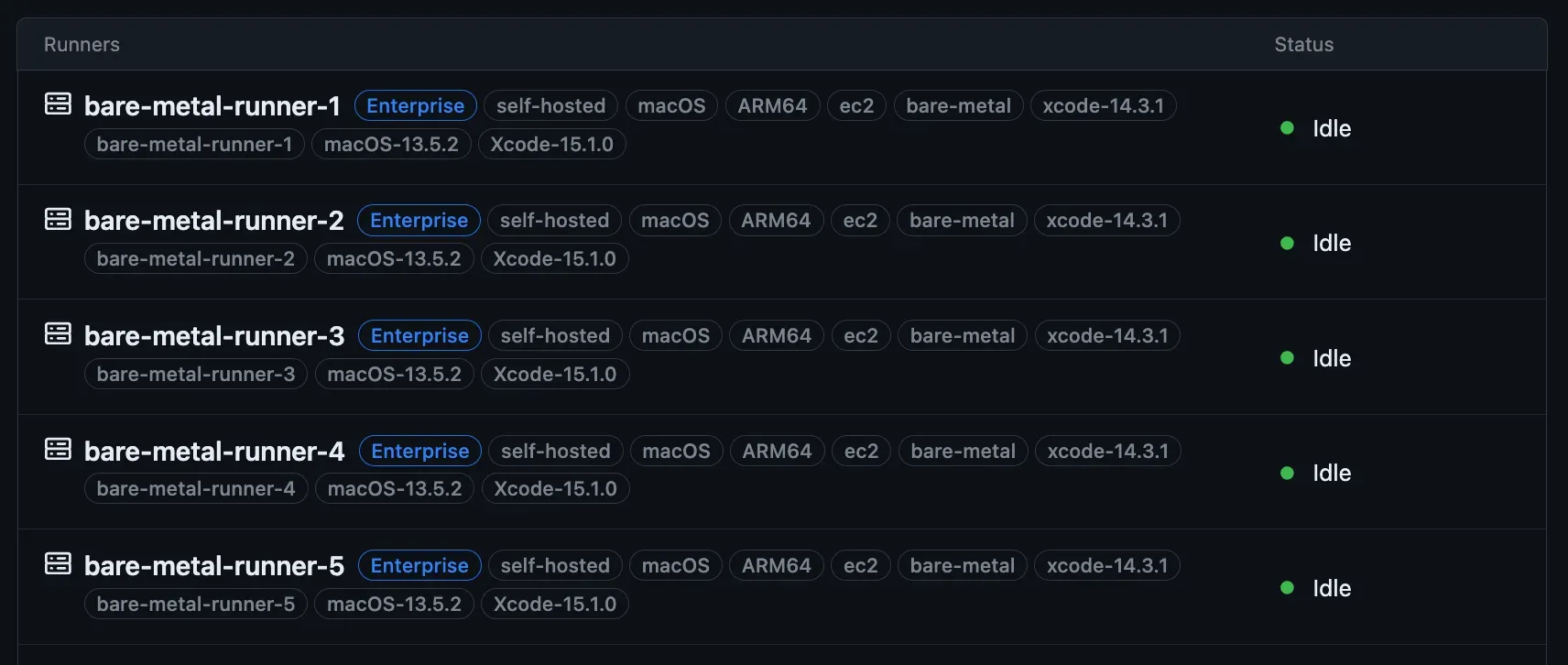

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
  <dict>
    <key>Label</key>
    <string>com.justeattakeaway.actions-runner-service</string>
    <key>ProgramArguments</key>
    <array>
      <string>/Users/ec2-user/actions-runner/runsvc.sh</string>
    </array>
    <key>UserName</key>
    <string>ec2-user</string>
    <key>GroupName</key>
    <string>staff</string>
    <key>WorkingDirectory</key>
    <string>/Users/ec2-user/actions-runner</string>
    <key>RunAtLoad</key>
    <true/>
    <key>StandardOutPath</key>
    <string>/Users/ec2-user/Library/Logs/com.justeattakeaway.actions-runner-service/stdout.log</string>
    <key>StandardErrorPath</key>
    <string>/Users/ec2-user/Library/Logs/com.justeattakeaway.actions-runner-service/stderr.log</string>
    <key>EnvironmentVariables</key>
    <dict>
      <key>ACTIONS_RUNNER_SVC</key>
      <string>1</string>
    </dict>
    <key>ProcessType</key>
    <string>Interactive</string>
    <key>SessionCreate</key>
    <true/>
  </dict>
</plist>
```

Not long after the deployment, all the steps above are executed and we can see the runners appearing as connected.
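Each connected runner carries the labels computed by configure_runner.sh from the Xcode bundles found in /Applications. The sketch below restates that label construction as two small functions that can be exercised without a Mac. It is an equivalent formulation using sed and paste, not the script's own pipeline, and the function names are hypothetical.

```shell
#!/bin/bash
# Reads bundle names from stdin, one per line (e.g. "Xcode-15.1.app"), and
# prints them as a comma-separated list without the .app suffix.
xcode_labels() {
  sed 's/\.app$//' | paste -s -d ',' -
}

# Assembles the full label list in the same order as configure_runner.sh.
runner_labels() {
  local runner_name="$1" macos_version="$2" xcode_labels_csv="$3"
  printf 'ec2,bare-metal,%s,macOS-%s,%s\n' \
    "$runner_name" "$macos_version" "$xcode_labels_csv"
}
```

For example, piping the two names Xcode-15.0.app and Xcode-15.1.app into xcode_labels yields Xcode-15.0,Xcode-15.1, which runner_labels then appends after the fixed labels.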

Multi-Team Use

We start the downscaling at 11:59 PM on Fridays and start the upscaling at 6:00 AM on Mondays. These times have been chosen in a way that guarantees a level of service to teams in the UK, the Netherlands (GMT+1) and Canada (Winnipeg is on GMT-6) accounting for BST (British Summer Time) and DST (Daylight Saving Time) too. Times are defined in UTC in the GHA workflow triggers and the local time of the runner is not taken into account.

Since the instances are used to build multiple projects and tools owned by different teams, one problem we faced was that instances could get compromised if workflows included unsafe steps (e.g. modifications to global configurations).

GitHub Actions has a documentation page about Hardening self-hosted runners specifically stating:

Self-hosted runners for GitHub do not have guarantees around running in ephemeral clean virtual machines, and can be persistently compromised by untrusted code in a workflow.

We try to combat such potential problems by educating people on how to craft workflows and rely on the quick redeployment of the stack should the instances break.

We also run scripts before and after each job to ensure that instances can be reused as much as possible. This includes actions like deleting the simulators’ content, derived data, caches and archives.
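A cleanup script of this kind could look like the sketch below. It is illustrative only: the two directories are the standard Xcode locations for derived data and archives, the function names are hypothetical, and further cache folders would need to be added to match a real setup.

```shell
#!/bin/bash
# Sketch: empty Xcode's build output folders under the given home directory.
clean_build_artifacts() {
  local home_dir="${1:-$HOME}" dir
  for dir in \
    "$home_dir/Library/Developer/Xcode/DerivedData" \
    "$home_dir/Library/Developer/Xcode/Archives"; do
    # Remove the contents but keep the directory itself.
    if [ -d "$dir" ]; then
      find "$dir" -mindepth 1 -maxdepth 1 -exec rm -rf {} +
    fi
  done
  return 0
}

# Sketch: wipe the content of all simulators (only meaningful on macOS with Xcode).
reset_simulators() {
  if command -v xcrun > /dev/null 2>&1; then
    xcrun simctl shutdown all > /dev/null 2>&1
    xcrun simctl erase all > /dev/null 2>&1
  fi
  return 0
}
```

One way to run such functions around every job is through the runner's ACTIONS_RUNNER_HOOK_JOB_STARTED and ACTIONS_RUNNER_HOOK_JOB_COMPLETED environment variables, which point to scripts executed before and after each job. The article does not state which mechanism is used at JET.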

Centralized Management via GitHub Actions

The macOS runners stack is defined in a dedicated macOS-runners repository. We implemented GHA workflows to cover the use cases that allow teams to self-serve:

- create macOS AMI

- deploy CI

- downscale for the weekend*

- upscale for the working week*

- release unused hosts*

* run without inputs and on a scheduled trigger

The runners running the jobs in this repo are small t2.micro Linux instances that come with the AWS CLI installed. An IAM instance role with the correct policies ensures that the aws ec2 commands allocate-hosts, describe-hosts and release-hosts can execute, and we use jq to parse the API responses.
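To illustrate how describe-hosts and allocate-hosts might be combined before scaling up, here is a minimal sketch that tops up the pool of dedicated hosts to a desired size. It is an assumption-laden illustration, not the JET workflow: the function names are hypothetical, it uses the CLI's --query option where the article uses jq, and it counts only hosts in the Available state, whether or not an instance already runs on them.

```shell
#!/bin/bash
# Number of extra hosts needed to reach the desired fleet size (never negative).
hosts_to_allocate() {
  local desired="$1" available="$2"
  local missing=$((desired - available))
  [ "$missing" -lt 0 ] && missing=0
  echo "$missing"
}

# Count mac2.metal Dedicated Hosts currently in the Available state.
count_available_hosts() {
  aws ec2 describe-hosts \
    --filter "Name=state,Values=available" "Name=instance-type,Values=mac2.metal" \
    --query 'length(Hosts)' \
    --output text
}

# Allocate only the hosts that are missing; do nothing when there are enough.
ensure_hosts() {
  local desired="$1" availability_zone="$2" missing
  missing="$(hosts_to_allocate "$desired" "$(count_available_hosts)")"
  if [ "$missing" -gt 0 ]; then
    aws ec2 allocate-hosts \
      --instance-type mac2.metal \
      --availability-zone "$availability_zone" \
      --auto-placement on \
      --quantity "$missing"
  fi
}
```

Hosts that are still in the Pending state are not counted, so a stricter version would also take them into account to avoid allocating more hosts than needed.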

A note on VM runners

In this article, we discussed how we’ve used bare metal instances as runners. We have spent a considerable amount of time investigating how we could leverage the Virtualization framework provided by Apple to create virtual machines via Tart.

If you’ve grasped the complexity of crafting a CI system of runners on bare metal instances, you can understand that introducing VMs makes the setup considerably more convoluted, which would be best discussed in a separate article.

While a setup with Tart VMs has been implemented, we realised that it’s not performant enough to be put to use. Using VMs, the number of runners would double but we preferred to have native performance as the slowdown is over 40% compared to bare metal. Moreover, when it comes to running heavy UI test suites like ours, tests became too flaky.

Testing the VMs, we also realised that the standard values of Throughput and IOPS on the EBS volume didn’t seem to be enough and caused disk congestion resulting in an unacceptable slowdown in performance.

Here is a quick summary of the setup and the challenges we have faced.

- Virtual runners require 2 images: one for the VMs (Tart) and one for the host (AMI).

- We use Packer to create VM images (Vanilla, Base, IDE, Tools) with the software required based on the templates provided by Tart and we store the OCI-compliant images on ECR. We create these images on CI with dedicated workflows similar to the one described earlier for bare metal but, in this case, macOS runners (instead of Linux) are required as publishing to ECR is done with tart, which runs on macOS. Extra policies are required on the instance role to allow the runner to push to ECR (using temporary_iam_instance_profile_policy_document in Packer’s Amazon EBS builder).

- Apple limits the number of VMs that can run on an instance to 2, which would allow us to double the size of the fleet of runners. Creating AMIs hosting 2 VMs is done with Packer and steps include cloning the image from ECR and configuring macos-init modules to run daemons to run the VMs via Tart.

- Deploying a virtual CI infrastructure is identical to what has already been described for bare metal.

- Connecting to and interfacing with the VMs happens from within the host. Opening SSH and especially VNC sessions from within the bare metal instances can be very confusing.

- The version of macOS on the host and the one on the VMs could differ. The version used on the host must be provided with an AMI by AWS, while the version used on the VMs is provided by Apple in IPSW files (see ipsw.me).

- The GHA runners run on the VMs meaning that the host won’t require Xcode installed nor any other software used by the workflows.

- VMs don’t allow for provisioning, meaning we have to share configurations with the VMs via shared folders on the host with the --dir flag, which causes extra setup complexity.

- VMs can’t easily run the GHA runner as a service. The svc script requires the runner to be configured first, an operation that cannot be done during the provisioning of the host. We therefore need to implement an agent ourselves to configure and connect the runner in a single script.

- To have UI access (à la VNC) to the VMs, it’s first required to stop the VMs and then run them without the --no-graphics flag. At the time of writing, copy-pasting won’t work even if using the --vnc or --vnc-experimental flags.

- Tartelet is a macOS app on top of Tart that makes it possible to manage multiple GitHub Actions runners in ephemeral environments on a single host machine. We didn’t consider it, to avoid relying on too much third-party software and because it doesn’t yet have GitHub Enterprise support.

- Worth noting that the Tart team worked on an orchestration solution named Orchard that seems to be in its initial stage.

Conclusion

In 2023 we have revamped and globalised our approach to CI. We have migrated from Jenkins to GitHub Actions as the CI/CD solution of choice for the whole group and have profoundly optimised and improved our pipelines introducing a greater level of job parallelisation.

We have implemented a brand new scalable setup for bare metal macOS runners leveraging the HashiCorp tools Packer and Terraform. We have also implemented a setup based on Tart virtual machines.

We have increased the size of our iOS team over the past few years, now including more than 40 developers, and still managed to be successful with only 5 bare metal instances on average, which is a clear statement of how performant and optimised our setup is.

We have extended the capabilities of our Internal Developer Platform with a globalised approach to provide macOS runners; we feel that this setup will stand the test of time and serve well various teams across JET for years to come.
